Artificial intelligence (AI) has been the megatrend driving markets since ChatGPT launched three years ago.

One thing I’ve learned over the past decade is that disruptive megatrends can last longer than you can stay interested. And right now, I’m seeing more and more investors asking the same question:

“When does the AI boom end?”

It’s easy to be down on AI today. It sounds smart. But as we like to say at RiskHedge, “Pessimists sound smart; optimists make money.”

In truth, the AI train hasn’t slowed down at all. But 2025 marked a changing of the guard in where the real investment opportunities are showing up.

Big tech companies like Microsoft (MSFT), Amazon (AMZN), Meta Platforms (META), and Alphabet (GOOGL) are still doing all the spending. But for investors, the biggest gains are no longer coming from the obvious AI poster children.

They’re coming from the “plumbing” underneath the boom.

- The largest infrastructure buildout in history is still accelerating.

So far, the AI boom has been driven by the biggest infrastructure buildout the world has ever seen.

This year alone, big tech companies will spend north of $400 billion building AI data centers. Over the next decade, total dollars spent on compute will exceed spending on crude oil—making compute the largest commodity in the world.

The scale of these data centers is hard to fathom.

Meta’s Hyperion data center will be nearly 4X the size of Central Park once completed. OpenAI’s flagship Stargate project will consume 5 gigawatts of power—roughly enough to power a city the size of Philadelphia.

Some investors look at numbers like these and say, “There’s no way this is sustainable. AI is a bubble.”

But they’re missing two key points.

Unlike the dot‑com era—when 97% of fiber cables lay “dark”—there are no dark GPUs today.

Also, in the dot‑com boom, infrastructure was bought by debt-fueled startups with zero profits. Today, it’s being bought by the most profitable companies in history.

- But here’s the part of the story a lot of folks are missing…

No matter how much money big tech throws at AI, none of it matters unless the underlying infrastructure can keep up.

And right now, the “plumbing” side of the AI equation—power, cooling, memory, and data transmission—is starting to buckle under the strain.

For years, investors worried about whether companies like OpenAI could get their hands on enough chips. That’s no longer the issue.

Today, the biggest bottleneck isn’t getting superfast GPUs… it’s whether you can even turn them on.

- In other words, power has become the #1 AI bottleneck.

Microsoft’s Satya Nadella recently said the company has GPUs sitting idle in racks because there isn’t enough electricity to turn them on. And that’s coming from a firm spending $100 billion on data centers.

In San Francisco, former OpenAI employee Andrew Mayne said Sam Altman told him OpenAI is currently 12 gigawatts underserved on power.

To put that into perspective: 1 gigawatt powers about 750,000 homes, so a 12‑gigawatt shortfall is roughly enough electricity for 9 million homes.

A decade ago, most data centers used under 10 megawatts. Today, Elon Musk’s xAI is building the world’s first 1‑gigawatt data center.

AI growth is no longer constrained by how many chips you can get your hands on, but by how quickly you can get power.

- To combat this growing problem, AI giants are going off-grid.

Today, America’s energy grid is slow, regulated, and outdated. (That’s what happens when you let power generation stagnate for 40 years. Oops.)

In fact, getting connected to the grid takes five years, on average. But AI can’t wait five years.

So the new blueprint is simple: go off‑grid and BYOP—bring your own power.

When building the “Colossus” cluster in Memphis, xAI installed 14 massive natural‑gas turbines on site. It went from a gutted factory to a fully operational, 100,000‑GPU data center in just 122 days.

To put that into perspective, it usually takes 234 days to build a single house.

- The biggest winners from AI may not be who you expect.

I’m a longtime nuclear bull. I also think solar and battery storage will play a big part in our future.

But AI companies need power yesterday—and right now, the fastest, most scalable option is natural gas.

That’s why natural gas, and turbine makers like GE Vernova (GEV), will be big winners from the AI buildout for the foreseeable future.

Natural gas may not be totally “clean,” but you can set up a couple dozen gas turbines next to a data center and get your chips whirring fast.

Today, 43% of data centers are powered by natural gas. The next closest competitor is nuclear, at 19%. With today’s constraints, I expect gas’s share to grow in 2026.

You can see this show up directly in earnings results. Big tech companies have already ordered $900 million worth of turbines from GE Vernova in the past nine months alone!

AI—and all its promised riches—may be the only thing that can cut through all the red tape that’s made it nearly impossible to build real infrastructure in America.

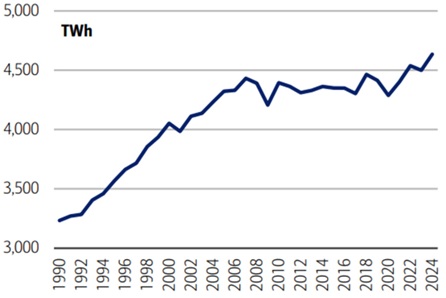

After flatlining for two decades, energy demand in the US is finally breaking out:

Source: Energy Institute

The countries that control compute will control AI. And you cannot have compute without energy.

Stephen McBride

Chief Analyst, RiskHedge

PS: Energy is just one of four plumbing “subsectors” I believe will drive the next wave of AI spending. In an upcoming article, I’ll reveal the other three.

And if you’d like to follow along as the new wave of AI spending gets underway, make sure you’re reading my free letter, The Jolt. It’s where I discuss the biggest megatrends shaping markets, and how to invest in them. Sign up today.